"A civilization without instrumentalities? Incredible." --Forbidden Planet

Although it often seems that computers and communication devices have been growing smaller and smaller with no end in sight, for some purposes, they are still far too intrusive and unintuitive. There are many workplace scenarios where data and communication services would be very beneficial as performance support systems, but the constraints and awkwardness of existing computer interfaces would interfere with the task at hand.

Fortunately, researchers have been working steadily for decades to make computers and communication devices nearly vanish by embedding them into our surroundings and networking them so they can sense the environment and interact with us in a manner that would integrate better with our living and working situations.

Early research groups developed scenarios to demonstrate the utility of these systems, which included independence support for the elderly, meeting facilitation, augmented driving and enhanced social interaction.


Posted by ellen at September 07, 2009 09:51 AM

History: The promise of Smart Ecosystems and Ambient Intelligence

In 2008, the working group on Ambient Computing from the European technology consortium InterLink published a paper summarizing the state of the art in Ambient Computing, focusing on the social awareness of systems and privacy concerns. Included in this paper are a history of projects and use-case scenarios and a description of the original vision of ubiquitous, pervasive networks based on many "invisible" small computing devices embedded into the environment. These "smart ecosystems" of devices were to provide an intuitive user experience, enabling new types of interaction, communication and collaboration:

"...the degree of diffusion of smart devices...will result in smart ecosystems that might parallel other ecosystems in the not too far future.

... a major challenge will be to orchestrate the large number of individual elements and their relationships, connect and combine them via different types of communication networks to higher level, aggregated entities and investigate their emerging behaviour."

Similarly, an MIT project called Oxygen, active around 2000-2003, focused on two areas. Intelligent Spaces would sense the presence of people, their tasks, and even their attention, and react appropriately. Mobile Devices, which presaged today's smartphones, would create connections to the physical world through a cluster of technologies such as cameras, sensors, networking, accelerometers, microphones, speakers, phone and GPS.

Ambient Computing Scenarios

- Ambient Agoras (from the Disappearing Computer Initiative)

"Ambient Agoras" was a project which aimed to provide situated services and turn everyday places into social marketplaces ('agoras') of ideas and information by using innovative information and communication technologies and devices embedded in home and office environments. The services would be facilitated by smart artifacts that enable users to communicate for help, guidance and fun. Examples of smart artifacts include the HelloWall, a wall-size (but somewhat primitive) ambient display that communicates through a flexible dot-based code that depends on who is nearby; the ViewPort, a mobile handheld device which can communicate with other items in the room, including the HelloWall; and a variety of telepresence devices such as the MirrorSpace and VideoProbe.

- Socially aware projects such as PERSONA and NETCARITY, which seek to reduce loneliness and isolation among the elderly by using Ambient Assisted Living technologies to maintain contact with family and friends; provide monitoring and user-friendly access to physical services such as personal care, shopping and housecleaning; provide virtual learning and exercise sessions; and allow community work from a remote location.

- InterLiving, a project funded by the Disappearing Computer Initiative which aims at developing technology in a social environment that contributes to bringing family members together using interactive and intergenerational interfaces.

- RoomWare, a set of interactive furnishings for meeting rooms, including ConnecTables and DynaWalls which allow movement of content data from screen to screen and table to table.

Scenarios from the MIT Oxygen project included: a business conference involving people in different countries coordinating a meeting in London using different languages and automated scheduling and travel planning, as well as navigational and data assistance once they arrive, and a "guardian angel" which allows aging-in-place by providing memory and safety support to elderly people living independently.

Over the last decade, many of these scenarios have been at least partially realized, often through device configurations not precisely foreseen by the early ambient computing thinkers, such as smartphones, GPS, RFID, and Bluetooth, as the market determines the dominant technologies. At this point these configurations emphasize portable devices more than embedded ones, but the end result is the same.

Smartphones bristling with data inputs from GPS, accelerometers, networking, video cameras, microphones, keyboards, and multi-touch have accelerated the evolution of pervasive computing by allowing mashups between all of these technologies, resulting in what could be called augmented intelligence for daily living.

Tipping points to acceptance of pervasive Performance Support Systems

Embedded technologies would be a natural fit as performance support systems, particularly where use of a keyboard or mouse is inconvenient or impossible. Science fiction has been portraying embedded performance support systems and ambient intelligence for years in movies and TV shows like 2001 and Star Trek. In these shows, sensors and voice output are combined with overwhelming artificial intelligence to create an intelligent environmental "presence" that monitors the situation and offers support and warnings as necessary. Somehow these systems always seem to understand the questioner's intent perfectly (how many search engines can do that?), and they gather their own data through sensors as well as direct input.

- When use of a job aid would damage credibility

- When speedy performance is a priority

- When novel and unpredictable situations are involved

- When smooth and fluid performance is a top priority

- When the employee lacks sufficient reading, listening, or reference skills

- When the employee is not motivated

In healthcare, it is often easier to speak or use gestures than to use your hands to look up information, partly because healthcare workers' hands are often busy with other things, and partly because of infection-control issues. Imagine if information displays could be projected wherever needed, with no-touch operation. Data is increasingly being handled in electronic form, but the usability of these systems often lags behind their data manipulation abilities. This creates a temporary and possibly dangerous gap in human performance, even as it opens up the usual opportunities afforded by digital information over paper. All sorts of solutions are being proposed to deal with this issue, from usability improvements to novel interfaces such as natural language processing.

Similarly, an article in Health Informatics details the development of a gesture-controlled medical image display system for use in operating rooms. Scan imagery is used for reference during neurosurgery by gloved surgeons who cannot touch anything but the sterile field and instruments. Currently, nursing personnel perform this task, manually finding the correct image and holding bound volumes of images up for the surgeons to review during procedures. Gesture-controlled interfaces were made famous by the movie Minority Report, but they are very real, and can even be constructed using commonly available components and open-source code.

The Minority Report gestural interface

Tom Cruise manipulates an advanced visual display system in Minority Report.

Image © 2002 DreamWorks LLC and Twentieth Century Fox.

Video of the Minority Report interface in action

The interfaces and "smart ecosystems" of tomorrow

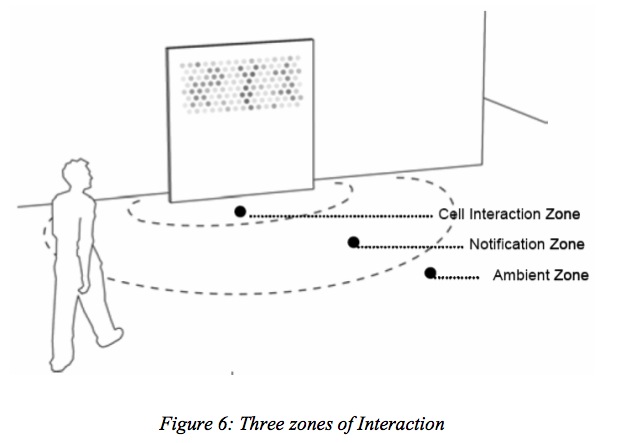

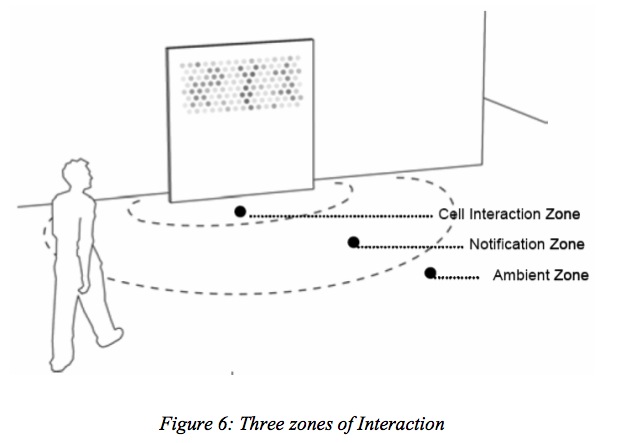

In a pervasive computing scenario, devices sense or communicate with people in order to provide contextually appropriate services. The interface is a key component in these scenarios, since a primary goal is to make interaction with the devices as natural and intuitive as possible. An astonishing amount of creativity and innovation is being directed at the problem of interface design, and the results will be life- and work-transforming.

Some of the more interesting modes of human-computer interaction that have been tried include directed attention, voice, gesture tracking, motion (body motion through space or motion of the device, like the Wiimote or the Siftables shown below), haptic feedback-enhanced touch, augmented reality and even brain-computer interfaces. Some of the most exciting and innovative interfaces are shown below. With devices like these, I think we'll be reaching more tipping points in the workplace very soon.

Interactive Floor Projection Screens

These systems project images on the floor, and use a camera to track body motion across the surface. You may be familiar with these displays from seeing them at malls and theaters, but they could be used to select files, control other devices, etc.

This video shows an interactive floor installation at a Japanese art gallery, showing its use as an interface for retrieving information about works of art. For more information about building this type of interface, see the Natural User Interface Group for setup instructions and code.
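To make the camera-tracking idea concrete, here is a minimal sketch of how a floor interface might turn a person's position into a selection. It is an illustration only, not code from any of the installations mentioned here: it assumes the camera pipeline has already produced a grid of 0/1 "motion" pixels, and the names `Zone`, `centroid` and `zone_at` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """A rectangular hot spot on the floor, in normalized 0..1 coordinates."""
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x, y):
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


def centroid(mask):
    """Return the normalized (x, y) center of all motion pixels, or None.

    `mask` is a list of rows of 0/1 values; it stands in for whatever a
    real camera pipeline would produce after background subtraction.
    """
    count = sum_x = sum_y = 0
    for row_index, row in enumerate(mask):
        for col_index, value in enumerate(row):
            if value:
                count += 1
                sum_x += col_index
                sum_y += row_index
    if count == 0:
        return None
    height, width = len(mask), len(mask[0])
    # Add 0.5 so the position refers to the middle of a pixel.
    return ((sum_x / count + 0.5) / width, (sum_y / count + 0.5) / height)


def zone_at(zones, point):
    """Return the name of the first zone containing `point`, or None."""
    if point is None:
        return None
    x, y = point
    for zone in zones:
        if zone.contains(x, y):
            return zone.name
    return None


# Hypothetical layout: left half opens a file, right half shows the next image.
zones = [
    Zone("open file", 0.0, 0.0, 0.5, 1.0),
    Zone("next image", 0.5, 0.0, 1.0, 1.0),
]

# Someone is standing in the upper-right corner of a 4x4 motion grid.
mask = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

selected = zone_at(zones, centroid(mask))
print(selected)
```

A real installation would also need to handle several people at once and require some dwell time before treating a position as a selection; both are left out here to keep the sketch short.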

Multitouch interfaces (like the one on the iPhone) are used in walls, tables, and smartphones. Microsoft Surface, a multi-touch table that allows multi-user, fine-grained control of objects on screen, also interacts with real objects and other devices using cameras and Wi-Fi. This is a great example of a "smart ecosystem" of intelligent devices which sense the status of the environment and lower barriers between people and their information. In one example, an image is transferred from a camera to the Surface table to a smartphone, simply by laying the camera and smartphone on the table.

Touch Wall

An intelligent whiteboard that uses cameras to track hand motion across a vertical display allowing zoomable, panning navigation through information in a non-linear format.

In February 2009, Pattie Maes of MIT demonstrated a project called "Sixth Sense", led by Pranav Mistry, which uses an inexpensive wearable and projector to enable amazing interactions between the real world and the world of data. Using natural gestures to interact with any surface, users can manipulate data and view information about products, people and ideas in the world around them. The revolutionary feature is its ability to act as a Sixth Sense for metadata of real objects, through barcode, facial or product recognition, combined with realtime feedback to the user through projection.

Siftables are physical tiles which form a smart ecosystem for manipulating data, in which each tile can sense the nearby tiles and what is on them. Tiles can be programmed to recognize proximity to specific types of tiles, so for instance "face" tiles can change their expressions as they move around the table, closer to or farther from other faces. The possibilities for this type of interaction with real-world data, represented by avatars that can react to specific attributes, are endless.
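The proximity behavior of the "face" tiles can be sketched in a few lines. This is a toy model under stated assumptions, not the Siftables software: the real tiles sense their neighbors directly, whereas here each tile simply carries table coordinates, and `FaceTile`, `SENSING_RANGE`, `neighbors` and `update_expressions` are hypothetical names chosen for illustration.

```python
import math
from dataclasses import dataclass

# Hypothetical sensing range, measured in tile widths.
SENSING_RANGE = 1.5


@dataclass
class FaceTile:
    """A tile showing a face; x and y stand in for its position on the table."""
    name: str
    x: float
    y: float
    expression: str = "neutral"


def neighbors(tile, tiles, radius=SENSING_RANGE):
    """Return every other tile within `radius` of `tile`."""
    return [
        other for other in tiles
        if other is not tile
        and math.dist((tile.x, tile.y), (other.x, other.y)) <= radius
    ]


def update_expressions(tiles):
    """A face looks happy when another face is nearby, neutral otherwise."""
    for tile in tiles:
        tile.expression = "happy" if neighbors(tile, tiles) else "neutral"


tiles = [
    FaceTile("a", 0.0, 0.0),
    FaceTile("b", 1.0, 0.0),
    FaceTile("c", 5.0, 5.0),
]

update_expressions(tiles)
print([(t.name, t.expression) for t in tiles])

# Slide tile "c" next to the others and let every tile react again.
tiles[2].x, tiles[2].y = 1.0, 1.0
update_expressions(tiles)
print([(t.name, t.expression) for t in tiles])
```

The same pattern extends to tiles that react only to specific types of neighbors: the rule inside `update_expressions` would check what each nearby tile represents rather than just whether one is present.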

Interactive Window

An example of intelligent devices controlling ambient lighting and mood.

An installation in a Greek museum showing the use of novel interfaces in games.

Sony's Revolution

Smart tiles somewhat akin to David Merrill's concept. These tiles form a sort of object-oriented grammar in which one tile modifies the parameters of the subject of another tile.

Some innovative interface ideas from TAT

- ICT: Future and Emerging Technologies Open Scheme

- Ambient Agoras - Dynamic Information Clouds in a Hybrid World (IST-2000-25134)

- The Disappearing Computer

- The Disappearing Computer II (DC) Proactive Initiative

- Natural User Interface Group

- 10 Futuristic User Interfaces

- Future of Internet Search: Mobile version

- TED Talk: Pattie Maes demos the Sixth Sense

- Jeff Han demos his breakthrough touchscreen

- David Merrill: Augmented reality

- Garmin: Synthetic Vision Technology

- Red Laser, an iPhone app that scans barcodes, with a free SDK so you can develop your own applications for the technology

- ScanCam, an iPhone app that scans and OCRs text from books, newspapers and magazines

- NeoReader, an iPhone app that scans 2D barcodes that link to websites

Novel Interfaces applied to real-world Performance Support situations

- A Real-Time Gesture Interface for Hands-Free Control of Electronic Medical Records

- Pervasive adaptation (Wikipedia): technologies used in information and communication systems which are capable of autonomously adapting to highly dynamic user contexts

- Artificial Intelligence for Engineering Design, Analysis and Manufacturing, Volume 23, August 2009, pp. 251-261

- Speech: From Radiology to the EMR
